ERP is critical software, and the risk of failure always comes with it. ERP is a ballgame that not many people play well. What is even worse, some don’t know they aren’t playing well. Choosing an ERP solution is another ballgame, and its story is no different. Many of us have read articles on how to choose an ERP, what parameters to look for when approaching vendors, partners, or solution providers, and which common ERP failures to keep an eye on. But not many of them emphasize the common misconceptions and mistakes made while choosing an ERP. Let’s address a few of those mistakes.

- Engaging The Essential Resources:

Companies often underestimate the importance of an ERP implementation project and the possibility of failure. Many times, the right resources are not included, and organizations do not plan well in advance for the activities and tasks needed during the implementation. The solution provider is usually equipped with the tools to deliver on the timeline, but the company needs to reciprocate. When it does not, the quality of the implementation suffers and the chances of ERP failure rise. And then the solution provider, or at times the ERP technology, takes the blame.

The best way to avoid ERP failures is to set priorities right from the beginning. Organizations should engage crucial team members from the very start. ERP tools touch every part of your company, so it is essential to have people from all departments participate in the process.

Some ERP vendors claim that their products are easy to install and that, once installed, the company can run on them flawlessly. Well, it never really works that way. The next common misconception we’ll talk about is the implementation timeline.

Companies think of ERP solutions as plug-and-play tools. In reality, ERPs are systems that need to be designed for the company, as per its requirements. This is not a one-day job; it takes time and resources. Large implementations can run for up to three quarters of a year if done methodically. Solution partners rely on implementation methodologies, which are often technology-specific. The project is divided into phases, with intermediate milestones.

Teams are deployed with dedicated skill sets for niche tasks in the implementation. Resource allocation from both sides is essential. Dedicated resources from the organization are a must, so that they can work with the solution partner throughout the implementation. Changing resources from time to time leads to ERP implementation failures.

Different organizations have different business processes, different scenarios, and different needs from their ERP. But organizations fail to understand that each ERP is built on best practices from across the industry. Implementation partners will help to bridge the niche needs, but the vast majority of business processes should follow best practices. Many ERPs have these best practices standardized and packaged as modules or bundles that can be deployed as per customer needs. Companies must always remember: less customization means less possibility of breakage. Implementations that lean toward best practices tend to be more successful than heavily customized ones.

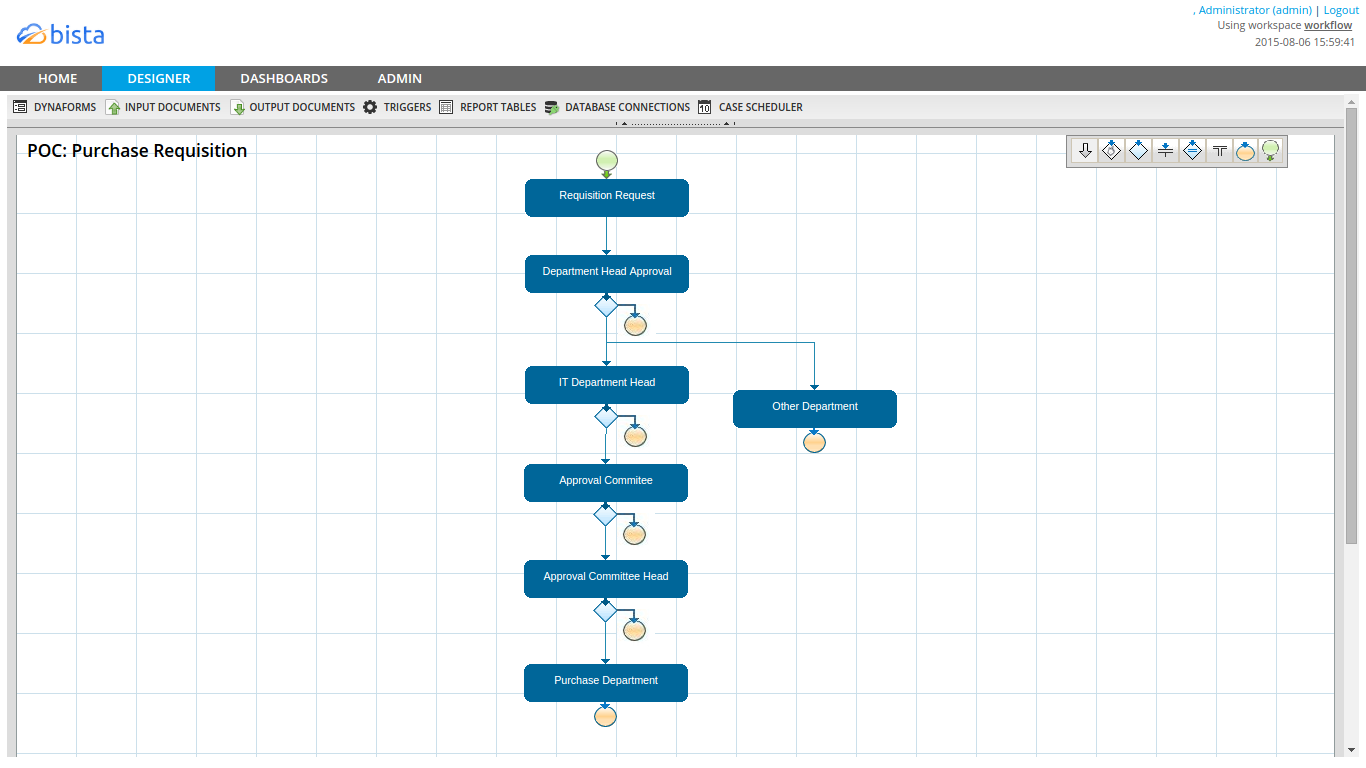

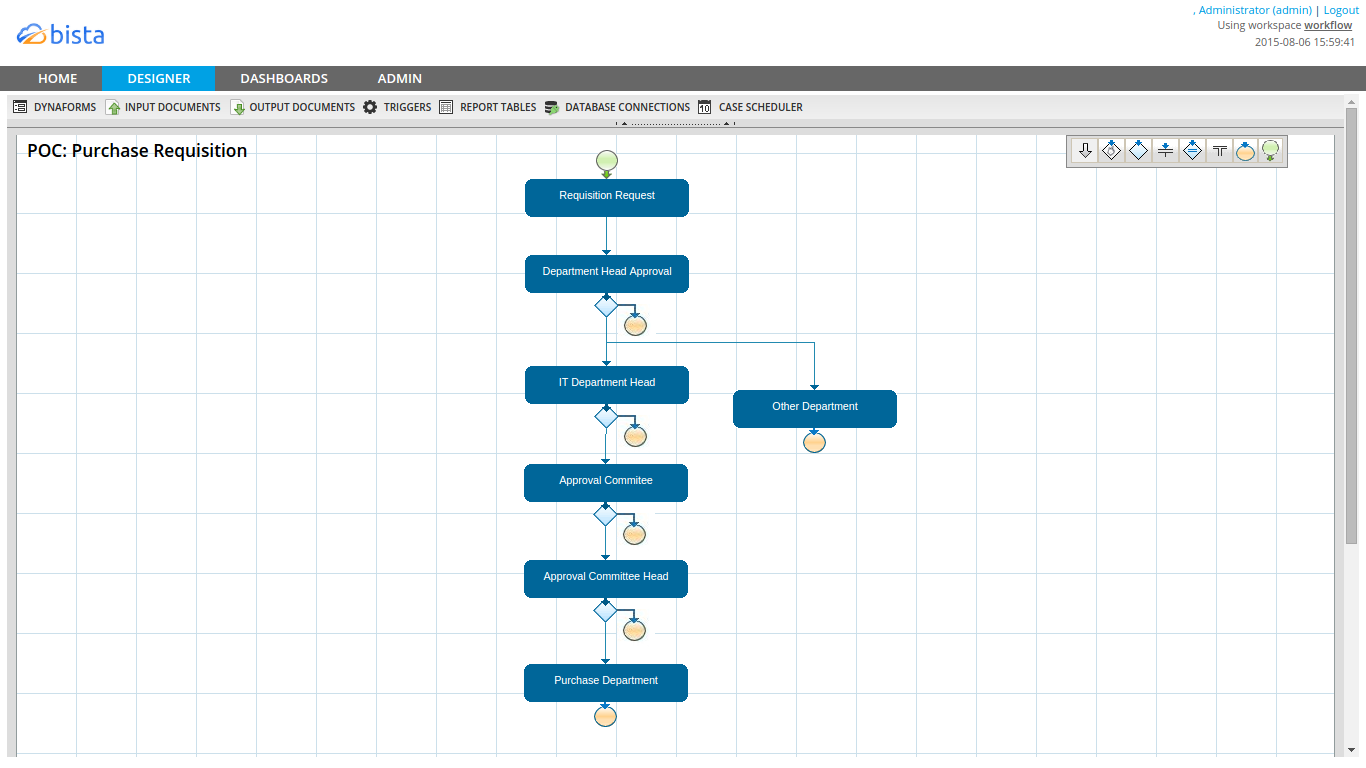

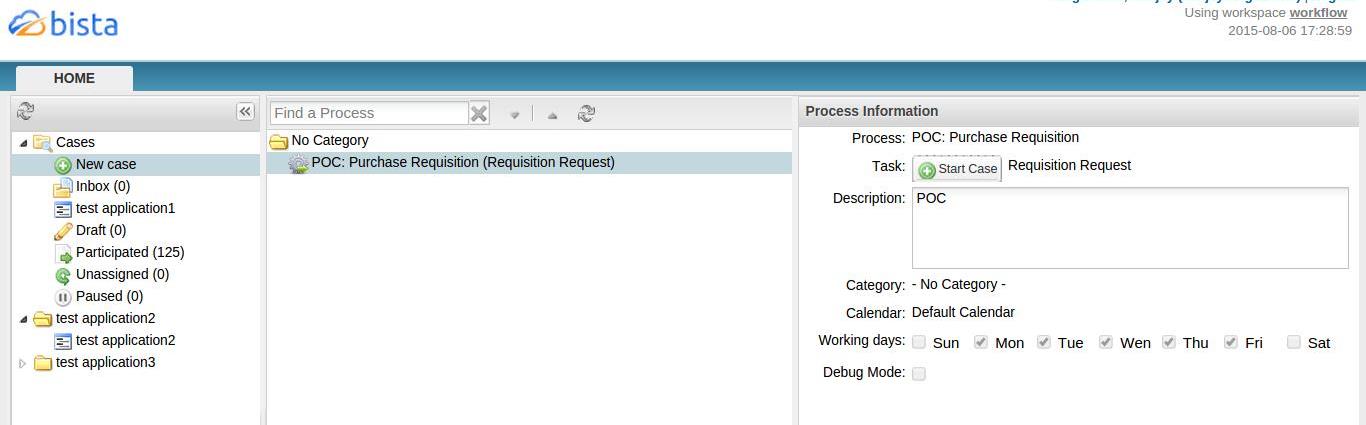

Today’s business runs at the speed of thought. If the ERP fails to keep up, you always end up with one wheel behind the finish line. Speed is a relative term, and for many ERPs it depends on server capacity, location, and so on. Organizations typically fall prey to the speed and agility demonstrated by the vendor or solution provider. The demos presented are generally hardwired and preset on servers or local machines, which makes it difficult to understand the true ability of an ERP. The best way to tackle this is to run a trial instance yourself. A real-life demo!

Solution providers can give organizations trial versions that run for a couple of weeks. Organizations can set up a basic configuration there and check whether the speed is up to the mark.

Companies capture massive amounts of confidential data, such as customer credit card details or financials. But while choosing an ERP, they often overlook the need for a system that handles this data securely.

Companies need to choose a secure ERP. Some systems can be hosted locally, on the premises, and in such scenarios data security is often overlooked. Some ERPs come with a very secure database backing them. Surprisingly, some cloud-based solutions are more secure than on-premises systems, and there are ERP vendors that claim to be as secure as any banking system. Companies must not skip checking the security standards of an ERP before implementation.

The total cost of ownership (TCO) of any product, especially an ERP system, has many components. Some ERPs can be owned outright by the company, while others are sold on a subscription model. This is the age of cloud ERPs, which can dramatically reduce the TCO for the organizations using them. Many companies fail to compute the TCO of an ERP before investing in it. This is something all companies must do.

An attractive TCO also brings your return on investment (ROI) sooner and makes it look much better within a short period. Today’s cloud offerings claim to deliver a healthy ROI within months.
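As a rough illustration of how TCO and payback could be compared before investing, here is a minimal Python sketch. It assumes TCO is simply one-time costs plus yearly recurring costs over a planning horizon; the `CostModel` class, the `payback_months` helper, and every figure below are hypothetical and not taken from any vendor's pricing.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    """Simplified cost model (an assumption made for illustration)."""
    upfront: float    # one-time costs: licenses, implementation, hardware
    recurring: float  # yearly costs: subscription, support, hosting

    def tco(self, years: int) -> float:
        """Total cost of ownership over the given number of years."""
        return self.upfront + self.recurring * years


def payback_months(investment: float, monthly_benefit: float) -> float:
    """Months needed for the monthly benefit to cover the investment."""
    if monthly_benefit <= 0:
        raise ValueError("monthly_benefit must be positive")
    return investment / monthly_benefit


# Hypothetical figures, chosen only to show the comparison.
on_premises = CostModel(upfront=200_000, recurring=30_000)
cloud = CostModel(upfront=40_000, recurring=50_000)

for years in (1, 3, 5):
    print(f"{years} yr: on-premises {on_premises.tco(years):,.0f}, "
          f"cloud {cloud.tco(years):,.0f}")
```

With these made-up numbers the subscription model is cheaper over a five-year horizon, but the gap narrows every year because its recurring cost is higher. That is why the horizon you plan for matters as much as the price tag.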

Many ERP products come with after-sales support, and many implementers provide it too. It is essential to choose your support channel wisely. Most of the time, the product company provides product support but fails to cope with the specifics of your implementation. Moreover, it may charge a fortune for each ticket. This is a common problem. Implementation companies, by contrast, support the system they built and, if need be, can escalate to the product company itself. It is more advisable to go with your implementation partner for support, as they are the ones who know your ERP better than anyone else.

Customers tend to think, “If we hire expert implementers, we won’t need test environments.” This is a myth! Test environments are not just for implementation partners to test. This is where the company can run acceptance testing, roll out future phases, load sample data, and train users. If you are planning to do away with the test environment, you’ll have to compromise on a lot.

Test environments are typically instances in which mock-ups of the implementation can be configured. Maintaining a backup of your customizations also becomes easy with a test environment in the picture. Today’s cloud ERPs can create a test environment that fully replicates your live instance.

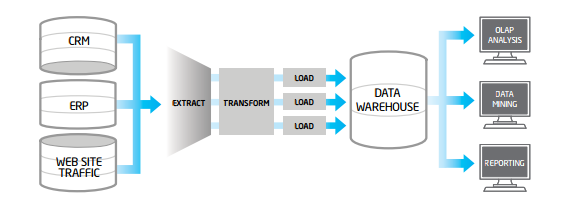

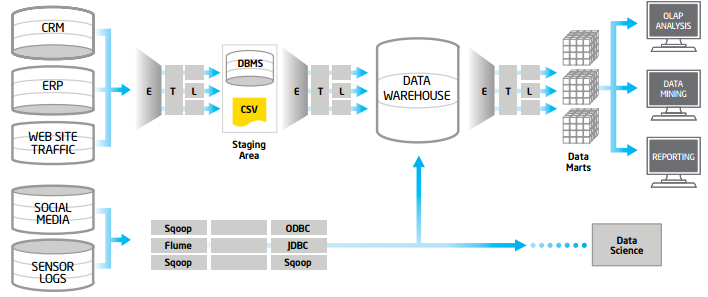

For many, data migration is just like uploading files to the internet. Well, it isn’t only about uploading. Companies tend to pay too little attention to data migration, even though data is the backbone of any ERP. A considerable fraction of the effort in migrating systems goes into migrating data. Different ERPs have different data structures or tables, so mapping effort is required to push the data in the form the new system needs.

Data migration is a combination of many tasks, namely planning, identifying and removing redundant data, cleaning the data, mapping, and importing/uploading. The result is significant and gives an ERP its true look and feel. Implementers are equipped with data migration skills and can help in pinpointing the effort estimate for this activity.
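To make the cleaning, mapping, and de-duplication steps more concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the legacy column names, the target field names in `FIELD_MAP`, and the choice of email as the duplicate key are all hypothetical, and a real migration would use the import tools and data model of the chosen ERP.

```python
# Hypothetical mapping from legacy column names to new ERP field names.
FIELD_MAP = {"cust_name": "name", "cust_email": "email", "tel": "phone"}


def clean(value):
    """Basic data hygiene: turn missing values into '' and trim whitespace."""
    return "" if value is None else str(value).strip()


def map_record(legacy):
    """Rename legacy fields to the new structure, cleaning each value."""
    return {new: clean(legacy.get(old)) for old, new in FIELD_MAP.items()}


def deduplicate(records, key="email"):
    """Keep the first record per key value, compared case-insensitively.

    Records with an empty key are kept, since they cannot be matched.
    """
    seen = set()
    unique = []
    for record in records:
        marker = record[key].lower()
        if marker and marker in seen:
            continue  # redundant record, skip it
        seen.add(marker)
        unique.append(record)
    return unique


def migrate(legacy_rows):
    """Map and clean every legacy row, then remove duplicates."""
    return deduplicate([map_record(row) for row in legacy_rows])


legacy_rows = [
    {"cust_name": " Acme ", "cust_email": "Info@Acme.com ", "tel": "123"},
    {"cust_name": "Acme", "cust_email": "info@acme.com", "tel": "123"},
    {"cust_name": "Beta", "cust_email": None},
]
for row in migrate(legacy_rows):
    print(row)
```

Even in this toy version, most of the code deals with mapping and hygiene rather than the upload itself, which mirrors where the effort tends to go in a real migration.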

- Discontinuing Legacy System:

The system you were using before the arrival of your new ERP is the legacy system. Many companies conveniently forget to discontinue it. Any system comes with a cost, and continuing it for no reason means an unnecessary cost to the company. Some organizations think of the legacy system as a backup plan they can fall back on. But the reality is that the fallback system never shows the true picture. Once a new ERP comes on board, the legacy system is no longer updated, and transactions stop flowing in unless deliberately entered. The best recommendation is to keep a single version of the truth; multiple versions can be misleading.

If you are looking to implement an ERP solution, you can consider Bista Solutions for ERP implementation services. We are partnered with leading ERP solutions, including Odoo – Open Source ERP, and Ramco. For more information, you can consult our experts at sales@bistasolutions.com or call us in the USA at +1 (858) 401 2332.